Here is a stat to ponder if you are interested in how scientific knowledge is shared in our discipline and in the general health of the science of behavior: We have a LOT of behavior analysis journals — more than 20 by my last count.

Is that a sign of robust health in a thriving discipline? Or just too many journals?

Consider that, on the science front at least, we are not a huge discipline. Subtract out all of the BCBAs and BCaBAs and RBTs who are trained at the master’s level and below and who focus primarily on practice, and I estimate that we’re talking about a few hundred to maybe a thousand committed behavior analysis researchers, tops. If I’m right, that translates to around one journal for every 50 researchers (or fewer).

Which sounds to me like spreading the talent pretty thin.

What “Too Many” Journals Would Look Like

Now, if we did have too many journals, you’d expect to see several consequences:

- Because potential readers are divided into many specialized sub-communities, many journals would have a small subscriber base and therefore relatively few readers. [Library subscriptions help with this problem, but not all libraries can afford access to all journals, and researchers who work in applied settings may not have research-library privileges.]

- The citation impact of many journals would be low.

- Some journals would have a manuscript flow problem, with too few papers submitted to guarantee a regular publication schedule. As a result, you’d see journals resorting to frequent special issues and other gimmicks in an effort to bring in more submissions.

- The acceptance rate (% of submissions accepted for publication) would be relatively high for some journals.

- Articles of middling-to-poor quality would be published fairly often.

Subjective appraisal: The preceding pretty well describes the landscape of behavior analysis journals. Most of the hard evidence bearing on my predictions (subscription numbers, article acceptance rates) is proprietary and therefore not available to us, but I’ve been on the inside of enough journals in the past to tell you that small subscriber bases, sketchy manuscript flow, and unusually high acceptance rates are not as rare as we’d hope. And as a reader, I see a lot of papers that are methodologically suspect, poorly written, or of unclear importance.

As for journal impact factor (which measures, in effect, the mean number of citations received in a given year by the articles a journal published in the preceding two years), well, the news isn’t great. Not all behavior analysis journals even have an impact factor, which means they are viewed as relatively unimportant by the services that calculate impact factors.
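For readers who want the arithmetic spelled out, the standard two-year impact factor for a journal in year $Y$ (the general definition used by Clarivate’s Journal Citation Reports, not anything specific to behavior analysis) can be written as below. The symbols are shorthand introduced here for illustration only.

```latex
\mathrm{JIF}_{Y} = \frac{C_{Y}(Y-1) + C_{Y}(Y-2)}{N_{Y-1} + N_{Y-2}}
```

Here $C_Y(t)$ counts citations received during year $Y$ by items the journal published in year $t$, and $N_t$ is the number of citable items (articles and reviews) the journal published in year $t$. As a purely hypothetical example, a journal that published 100 citable items across the two prior years, which together drew 205 citations in year $Y$, would have an impact factor of 205 / 100 = 2.05.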

At least 16 of our journals do have an impact factor. The average for these journals is 2.05, compared with a mean of about 3.8 for journals across many disciplines and about 3.5 for 78 journals published by the American Psychological Association (APA). Of course, averages can be misleading when the underlying distribution is skewed. That’s true for impact factor, as there are relatively few very-high-impact journals, with a long tail stretching out toward lower impact.

Therefore, let’s size up behavior analysis journals in a different way. The median impact factor for journals overall is 2.7; for APA journals it’s 2.6. In rough terms, then, a behavior analysis journal with an impact factor of about 2.6 or higher falls into the top half of comparison journals. Sadly, in 2022, this was true for only four of our journals (Journal of Contextual Behavioral Science, Behavior Modification, Journal of the Experimental Analysis of Behavior, and Journal of Applied Behavior Analysis). By comparison, 38 (49%) of the APA journals fell into the top half of journals overall.
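A minimal Python sketch of why the median is the fairer yardstick here. The impact factors below are made up for illustration (they are not real journal data); one very high value drags the mean above the median, much as a handful of very-high-impact journals does in the real distribution. The benchmark of 2.6 is the comparison median cited above, and the helper name `summarize` is hypothetical.

```python
from statistics import mean, median

# Hypothetical impact factors (illustrative only, not real data).
# The single 9.5 creates a long right tail, like a few very-high-impact journals.
impact_factors = [1.1, 1.4, 1.6, 1.9, 2.3, 2.5, 3.0, 9.5]


def summarize(values, benchmark):
    """Return (mean, median, count of values at or above the benchmark)."""
    at_or_above = sum(1 for v in values if v >= benchmark)
    return mean(values), median(values), at_or_above


# 2.6 stands in for the median impact factor of the comparison journals.
avg, mid, n_top_half = summarize(impact_factors, benchmark=2.6)
print(f"mean={avg:.2f} median={mid:.2f} top_half_count={n_top_half}")
```

With skewed data like this, the mean sits well above the median, so "above average" can describe far fewer journals than "in the top half" would suggest.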

A Modest Proposal

You can decide for yourself whether there’s really a problem here, but if you agree with me that we have too many journals, then the question is what should be done about this. To me the answer seems obvious: If we were to reduce the number of journals, many of the problems mentioned above would go away. The number of manuscripts available to each journal would go up. Because journals can afford to be pickier, the quality of published articles would increase. Because of this, and with fewer journals to keep track of, readership and citation of the typical article would presumably increase.

Here’s a way to visualize what’s possible through journal consolidation. In mainstream psychology there are several journals with independently edited topical sections. Articles appear in each section as they are accepted, so not every section necessarily has to be represented in each issue — thus, manuscript flow within a section is not a pressing concern. Because there are a lot of sections, the journals subsume the interests of a broad range of authors and thus have the manuscript flow to support up to 12 issues a year. The variety of sections also appeals to a broad range of readers, thus ensuring that the journal isn’t ignored.

So… picture an “Omnibus Journal of Behavior Analysis” with separately edited sections on a variety of topics currently addressed in our discipline’s niche journals (e.g., education, behavior in organizations, verbal behavior, social justice issues, disability concerns, and more). Add in whatever other topics you think merit a section (e.g., behavioral economics), and include a “General” section for whatever doesn’t fit elsewhere. The result could be a powerhouse journal, one in which just about every behavior analyst, regardless of specialty area, can find something of interest.

Fixed Constraints

But before you get too excited, remember that this probably is never going to happen, for at least three reasons.

- Our journals are owned and published by various organizations and businesses that are never going to collaborate just to create a better journal. Profits and turf issues and other non-scholarly considerations will get in the way.

- Some scholars will adamantly oppose consolidation. Some believe that highly specialized journals are necessary as a sort of “incubator” to allow important areas of research to grow. Some simply like being a big fish in a little pond.

- Let’s not overlook that we authors benefit when journals get too few submissions. This makes it easier for us to publish our stuff, and THAT fuels the hunt for promotion, tenure, raises, professional visibility, etc.

Given these considerations, it’s hard to imagine who is going to line up to tackle the difficult work of figuring out how to fit the same amount of science into fewer journals.

One More Thing: Qualitatively Different Audiences

There’s actually a fourth consideration, which is that different journals can serve different functions. I’ve mentioned measuring journal “quality” via scholarly impact, that is, the influence that a journal’s articles exert over other researchers. But there’s a different kind of influence that can be equally important: dissemination impact, in which journal articles inform people who are not scientists about behavior analysis (for more on the concept, see here and here). And the two kinds of influence tend to be pretty independent, in that scholarly impact and dissemination impact don’t correlate strongly on an article-by-article basis.

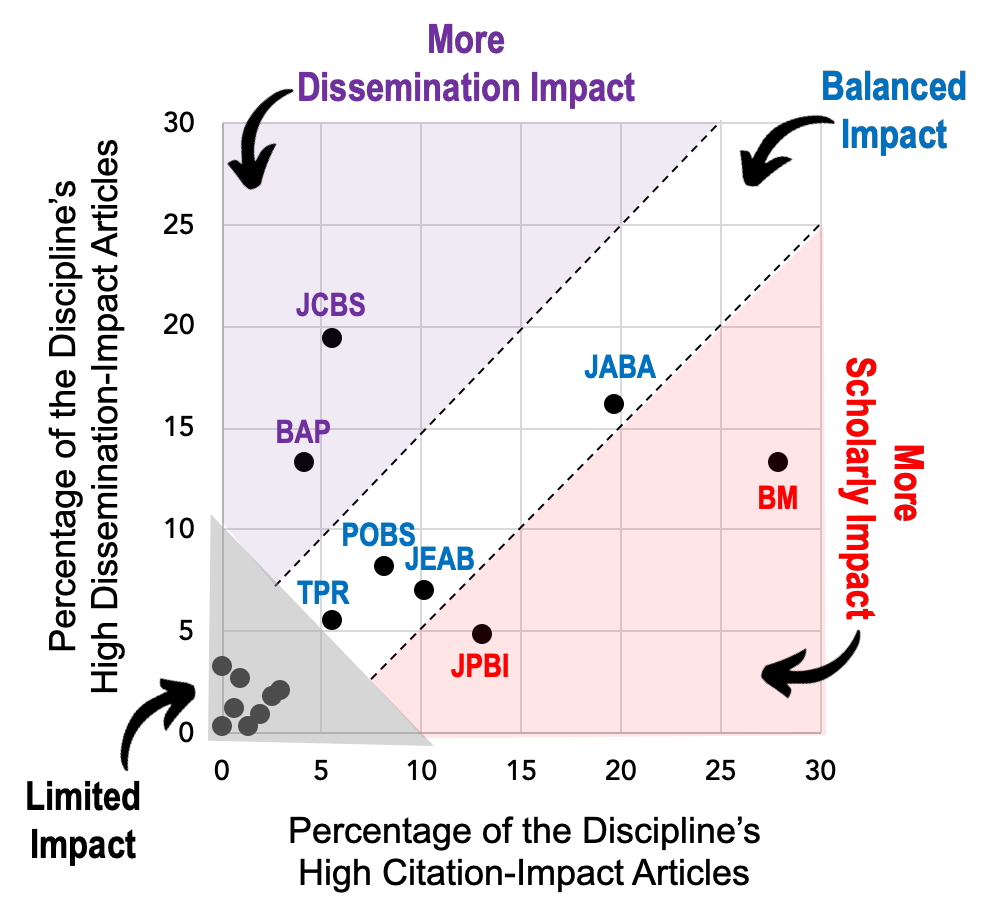

It’s possible that, in addition to thinking about our journals in terms of topical coverage, we should consider what type of “influence footprint” each has. Here’s a sketch of how to approach this problem. In previous posts I described behavior analysis articles from the 21st Century so far that have had the greatest scholarly impact (measured through citation counts) versus dissemination impact (measured using the Altmetric Attention Score, which reflects attention in non-scholarly sources like news stories, social media, and blogs). In each case I created a pool of about 300 of the articles with the highest scores; these data can be used to “diagnose” what some of our journals are best at.

The figure below summarizes two outcomes for each of 16 behavior analysis journals: its percentage of the most-cited 21st Century articles, and its percentage of the 21st Century articles with the highest Attention Scores. This display provides no objective definition of what’s “good enough,” only a way to compare across journals.

I’ve divided the graph into four zones.

- Purple: Journals with high dissemination impact relative to citation impact (Behavior Analysis in Practice, Journal of Contextual Behavioral Science).

- Red: Journals with high citation impact relative to dissemination impact (Behavior Modification, Journal of Positive Behavior Interventions).

- White: Journals with relatively balanced levels of dissemination and citation impact (e.g., Journal of Applied Behavior Analysis).

- Gray: Journals that produce few articles with either high citation rates or high Attention Scores.
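To make the zoning logic concrete, here is a minimal Python sketch under stated assumptions. It computes each journal’s percentage share of a most-cited pool and of a highest-Attention-Score pool, then assigns a zone. The cutoffs `FLOOR_PCT` and `RATIO`, the helper names, and the journals “A” through “E” are all hypothetical; the zones in the graph were not drawn with these (or any stated) numeric thresholds, and the toy pools are not real data.

```python
from collections import Counter


def pool_shares(pool_journals):
    """Percentage of a top-article pool contributed by each journal.

    pool_journals: one journal name per article in the pool
    (e.g., the ~300 most-cited articles).
    """
    counts = Counter(pool_journals)
    total = len(pool_journals)
    return {journal: 100.0 * n / total for journal, n in counts.items()}


# Hypothetical cutoffs chosen only for illustration.
FLOOR_PCT = 2.0  # below this share in BOTH pools -> "gray"
RATIO = 2.0      # one share at least this multiple of the other -> lopsided


def zone(cited_pct, attention_pct):
    """Assign a zone from a journal's two pool shares (in percent)."""
    if cited_pct < FLOOR_PCT and attention_pct < FLOOR_PCT:
        return "gray"    # few standout articles of either kind
    if attention_pct >= RATIO * cited_pct:
        return "purple"  # dissemination impact outweighs citation impact
    if cited_pct >= RATIO * attention_pct:
        return "red"     # citation impact outweighs dissemination impact
    return "white"       # roughly balanced


def classify(cited_pool, attention_pool):
    """Zone for every journal appearing in either pool."""
    cited = pool_shares(cited_pool)
    attention = pool_shares(attention_pool)
    journals = sorted(set(cited) | set(attention))
    return {j: zone(cited.get(j, 0.0), attention.get(j, 0.0)) for j in journals}


# Toy pools of 100 articles each, with placeholder journal names.
cited_pool = ["A"] * 40 + ["B"] * 10 + ["C"] * 49 + ["E"] * 1
attention_pool = ["A"] * 36 + ["B"] * 53 + ["C"] * 10 + ["E"] * 1
print(classify(cited_pool, attention_pool))
# prints {'A': 'white', 'B': 'purple', 'C': 'red', 'E': 'gray'}
```

Using a ratio rather than a raw difference keeps the comparison “relative,” in the spirit of the zone descriptions above, but any real cutoffs would be a judgment call.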

These zones suggest that journals with a similar topical focus aren’t necessarily “the same.” For instance, both Journal of Contextual Behavioral Science and Behavior Modification publish papers related to psychotherapy, but so far in the 21st Century they seem to have reached different kinds of audiences (more “everyday people” in the former case, more scientists in the latter). Similarly, Journal of Applied Behavior Analysis and Behavior Analysis in Practice are very different kinds of journals, despite their nominal topical similarity. If we deem this diversity of reach healthy for the discipline, then we’d need to think carefully about my proposal for merging journals.

At the least, the graph reminds us that it’s possible to consult objective “quality” indicators to guide decisions about how our journals are performing. For instance, notice the cluster of journals that, in the 21st Century, have produced few high-impact articles of either sort. Were I to explore creating an omnibus behavior analysis journal, these would be the first I’d examine for possible consolidation. No disrespect intended, but these are journals that, at least as portrayed by citation and altmetric data, have a limited audience.

Conclusion

In the end, of course, this discussion is strictly a simulation. The world of behavior analysis journals has been created piecemeal across many decades by many organizations and individuals, sometimes working at cross purposes to one another. It makes complete sense that today’s patchwork quilt of journals doesn’t make sense. And it would be folly to think that there’s any simple solution. That said, if we don’t occasionally take stock of our scientific infrastructure, we have zero chance of changing this chaotic status quo.

Postscript: Good People in a Bad Situation

I want to be 100% clear that I’m not casting aspersions at any of the people who bust their butts every day to keep behavior analysis journals operating. As a part of many editorial teams over the years, I’ve been honored to work with many colleagues who gave this work their absolute best. The problem is not with the leadership of individual journals. The problem is that these people are given an impossible task. There is only so much good science to publish, and by creating too many journals we’ve crafted a zero-sum situation, a scientific Hunger Games in which one journal can prosper only at another’s expense. For a relatively small and new discipline, I’m just not sure that’s healthy.