PREVIOUS POSTS IN THIS SERIES

- Pay No Attention to That Self Behind the Curtain, OR the Least Behavioristic Thing About Behavior Analysts

- There Are Too Many Behavior Analysis Journals. I Can Prove It.

- Dead Men, Sexy Blondes, and the Confirmation Bias, OR The Illogic of the “Conceptual Article”

- More Concerns About Equity and Inclusion in the Science of Behavior

Disciplines are supposed to move forward.

For instance, somewhere — apologies, I forget where — Steve Hayes wrote that no self-respecting discipline should be using textbooks that are 50+ years old in their graduate courses (I’ll leave it to you to guess which book he was specifically referencing). And Steve was right. Successful disciplines build on, but also move beyond, strong foundations.

In biology, there was once considerable debate about the validity of germ theory (the notion that teensy things too small to see cause disease). That got settled, empirically speaking, and biology has moved on to more sophisticated problems. In fact, germ theory is so accepted that seminal work establishing its validity is no longer cited except, perhaps, in historical treatises or introductory textbooks.

In disciplines that are moving forward, what once counted as “breakthroughs” eventually become common knowledge.

If a discipline’s intellectual touchstones remain static — if foundational concepts don’t, like germ theory, gradually fade into the background to make room for more sophisticated problems — well, hard questions arise. Is the discipline moving forward? Or is it ruminating on the familiar, that is, simply slicing up the same phenomena into ever more minute portions?

This got me to wondering about the extent to which applied behavior analysis has moved forward.

For context, I’m going to share some bird’s-eye-view data (all pulled from Web of Science in January 2024) that perhaps do a better job of framing the question than of answering it. This is mostly a pictorial essay, in three parts.

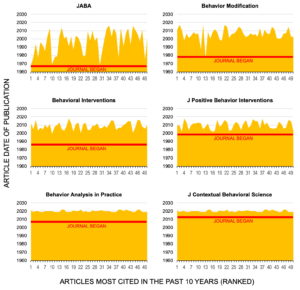

(1) The figure above shows, for six prominent applied journals, the publication dates of each journal’s 50 most influential articles (those most cited during the last 10 years). Journals whose influential articles are relatively recent show a lot of yellow; journals whose influential articles are older show less. Clear differences across journals are apparent, with Journal of Applied Behavior Analysis (JABA) particularly standing out for emphasizing older sources. Contrast this with the newer articles being cited from Behavior Analysis in Practice (BAP) and Journal of Contextual Behavioral Science (JCBS).

For each journal, a horizontal red line shows the year the journal began publication. Considering when the various journals were founded, it appears that the older the journal, the older its most impactful sources. One thing to consider here is that behavioral journals tend to do a lot of self-citing (many of them are their own most common source of citations; see the Postscript). With this in mind, it’s logical that older journals would self-cite older stuff, because they HAVE older stuff to cite. However, because self-citations make up only a minority of a journal’s total citations, even for our most self-citing journals (see the Postscript), self-citation can’t fully account for what we see in the figure.

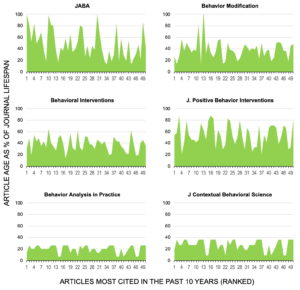

(2) To better account for journal lifespan, the figure above shows the age of each journal’s most-cited articles (still during the last 10 years) as a percentage of the journal’s age (here, more green means the most impactful sources are older). Again, JABA is among the journals whose most-cited articles are oldest, and again, BAP and JCBS stand out for the attention earned by newer sources. Thus, even after accounting for their different histories, the journals are being cited differently. A curiosity here is Journal of Positive Behavior Interventions (JPBI), whose most-cited sources often date from its early days.
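The normalization in this second figure is simple arithmetic. Here is a minimal sketch, assuming ages are counted in whole years up to 2024 (when the data were pulled). The function name is hypothetical, and the founding year of 1968 (JABA’s first year) is paired with invented article years purely for illustration, not taken from the Web of Science data.

```python
def age_as_percent_of_journal_age(pub_year: int, founding_year: int,
                                  ref_year: int = 2024) -> float:
    """Return an article's age as a percentage of its journal's age.

    Assumes both ages are counted in whole years up to ref_year
    (2024 here); this counting convention is an assumption.
    """
    journal_age = ref_year - founding_year
    if journal_age <= 0:
        raise ValueError("journal must be founded before the reference year")
    article_age = ref_year - pub_year
    return 100.0 * article_age / journal_age


# Hypothetical articles from a journal founded in 1968
for year in (1968, 1994, 2020):
    print(year, round(age_as_percent_of_journal_age(year, 1968), 1))
# prints: 1968 100.0 / 1994 53.6 / 2020 7.1
```

An article as old as its journal scores 100%, so a journal whose most-cited work clusters near that value is leaning heavily on its earliest days.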

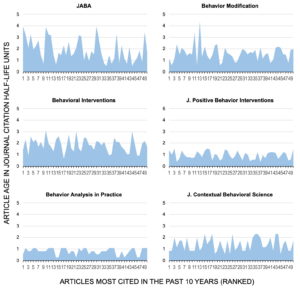

(3) There’s one more wrinkle to introduce. It has long been understood that journals differ in how quickly citations pile up for their articles. For some journals, you can tell within a few years which articles will be heavily cited. For others, you might need to wait a decade or more to get a clear picture. The reasons for this are unclear, but for the sake of illustration, consider the following hypothetical. Imagine that each applied behavioral journal represents a unique pool of authors with unique habits when it comes to how quickly new ideas are embraced. Imagine, too, that the only citations of each journal’s articles come from other articles in the same journal (all self-citations; see the Postscript). One consequence would be that citations build up at different speeds for articles in different journals.

That is, in fact, what we see. Citation half-life (what Journal Citation Reports calls cited half-life) is the median age of a journal’s articles that were cited in a given year: half of the citations go to more recent articles and half to older ones. A long half-life therefore means that citations keep accruing to a journal’s articles for many years. According to Journal Citation Reports, citation half-lives are relatively long for JABA (13.9 years), Behavior Modification (10.5 years), and JPBI (9.4 years). They are especially short for BAP (3.7 years) and JCBS (3.5 years). In between falls Behavioral Interventions (7.7 years).
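As a rough illustration of what a citation half-life captures, here is a simplified sketch that treats it as the plain median age of the articles cited in one census year. Journal Citation Reports uses its own calculation (which produces fractional values such as JABA’s 13.9 years), so this is only an approximation. The function name and both citation lists are hypothetical.

```python
from statistics import median


def cited_half_life(cited_pub_years: list[int], census_year: int) -> float:
    """Simplified cited half-life: the median age of the cited articles.

    cited_pub_years holds one entry per citation received in census_year,
    giving the publication year of the article that was cited.
    """
    if not cited_pub_years:
        raise ValueError("no citations to summarize")
    ages = [census_year - year for year in cited_pub_years]
    return float(median(ages))


# Hypothetical citations received in 2023 by two journals
slow_journal = [1970, 1975, 1981, 1990, 1999, 2005, 2012, 2018]
fast_journal = [2012, 2016, 2018, 2019, 2020, 2021, 2022, 2022]
print(cited_half_life(slow_journal, 2023))  # 28.5
print(cited_half_life(fast_journal, 2023))  # 3.5
```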

To adjust for these differences in how quickly citations accumulate, the figure above shows the age of each journal’s most-cited articles in citation half-life units (that is, article age divided by the journal’s citation half-life; more blue means relatively older sources). Once again, JABA skews older, while BAP and JCBS skew younger.
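To make the half-life adjustment concrete, here is a minimal sketch that divides an article’s age by its journal’s citation half-life. The half-lives are the Journal Citation Reports values quoted above; the function name and the 14-year-old article are hypothetical.

```python
# Citation half-lives in years, as reported from Journal Citation Reports
HALF_LIFE = {
    "JABA": 13.9,
    "Behavior Modification": 10.5,
    "JPBI": 9.4,
    "Behavioral Interventions": 7.7,
    "BAP": 3.7,
    "JCBS": 3.5,
}


def age_in_half_lives(article_age_years: float, journal: str) -> float:
    """Express an article's age in units of its journal's citation half-life."""
    return article_age_years / HALF_LIFE[journal]


# A hypothetical 14-year-old article, placed in each journal in turn
for journal in ("JABA", "BAP", "JCBS"):
    print(journal, round(age_in_half_lives(14, journal), 2))
# prints: JABA 1.01 / BAP 3.78 / JCBS 4.0
```

The same 14-year-old article sits at about one half-life in JABA but four in JCBS, so raw age alone understates how old a source is, relative to its citing community, in a fast-citing journal.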

What does all this mean? Specifically, if the articles making a contemporary splash from a given journal are fairly old, what does that tell us? In the context of my germ theory example, this could signify stagnation, in which people keep working on the same problems, using the same theoretical ideas, as they did decades ago. This was Steve Hayes’ concern.

However, in our age of replication crisis, when so many scientific houses seem to be built on sand, perhaps a continuing reliance on decades-old sources should be comforting, suggesting that our science rests on intellectual stone(?).

Because the present data show considerable differences between journals, there’s no simple, discipline-wide answer. Recall my hypothetical from above, in which each journal represents a unique, independent verbal community. If this is our reality, then the different journals can’t really be considered to represent one discipline, can they? For instance, in the last 10 years, there was negligible cross-citing between JABA and JCBS (according to Web of Science, neither is among the top 25 journals citing the other’s articles). In this empirical sense, JABA-style ABA and JCBS-style contextual science are separate disciplines.

We might expect a more harmonious relationship between JABA and BAP (whose mission once seemed so similar to JABA’s that it was dismissively branded “JABA Junior”). Each is the other journal’s #2 most-common source of citations. Yet if these two journals represent strongly overlapping communities, why are their citation patterns so different? Perhaps the difference traces to BAP being a practice-focused journal and JABA being more of an exercise in bench-to-bedside translational science. For various reasons, perhaps the embrace of new ideas proceeds at a different pace for the two enterprises? It’s interesting to note that two other journals whose articles get cited relatively rapidly (when article age is measured in citation half-life units), JCBS and JPBI, also have a strong practice focus (for instance, I’ve written previously about JCBS’s strong dissemination impact; see here and here).

So, what do YOU think about all of this?

Maybe you don’t think about it 🙂. After all, most people don’t get excited about bibliometric information. But I urge you not to be complacent. As I alluded to at the outset, there’s a fine disciplinary line between treading old water and swimming to new destinations. We behavior analysts love our traditions, which is fine, but preserving tradition should never be a discipline’s reason to exist. If reliance on fairly ancient sources, in at least some of our journals, is a sign that we are stagnating, then this is cause for alarm. If some journal communities do a better job than others of forging ahead, we should study how they do it so we can encourage progress elsewhere. I’m not saying that the data I shared provide firm evidence of anything, only that they suggest a need to talk about where, if at all, behavior analysis is stuck, and what to do about that.

I’m really interested in your reactions. Use the Comments box below!

Postscript: Self-Citation Rates

As a general rule, journals are expected to have low self-citation rates (meaning most citations come from other journals). The underlying logic is that citation “impact” is most meaningful if the influence extends beyond the limited pool of scientists who participate in a given journal. In other words, citation impact shouldn’t be too incestuous. The mean self-citation rate for all journals appears to be around 10%, with 20% considered high. Behavior analysis journals have a history of high self-citation rates, which may not be surprising when you consider our tendency to self-isolate from other disciplines. In 2005, Elliot and colleagues reported self-citation rates over 30% for both JABA and Journal of the Experimental Analysis of Behavior. In 2021, self-citation rates exceeded 20% for four of the journals examined here: JABA, BAP, JCBS, and Behavioral Interventions. The two outliers, with only about 2% self-citations, were Behavior Modification and JPBI.
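For readers who want to check a journal themselves, the self-citation rate described here is just the share of a journal’s received citations that come from the journal itself. A minimal sketch follows; the function name and the three-journal tally are hypothetical, and the 20% comparison simply mirrors the threshold mentioned above.

```python
def self_citation_rate(citations_by_source: dict[str, int], journal: str) -> float:
    """Percentage of the citations a journal receives that come from itself.

    citations_by_source maps each citing journal to the number of
    citations it gave `journal` over some time window.
    """
    total = sum(citations_by_source.values())
    if total == 0:
        raise ValueError("no citations to count")
    return 100.0 * citations_by_source.get(journal, 0) / total


# Hypothetical tally of where one journal's citations came from
received = {"Journal X": 45, "Journal Y": 80, "Journal Z": 25}
rate = self_citation_rate(received, "Journal X")
print(rate)        # 30.0
print(rate >= 20)  # True: at or above the ~20% level considered high
```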